AI Is an Opinionated Mirror: What Artificial Intelligence Thinks Consciousness Is

Artificial intelligence thinks it sees us clearly. It does not. It is staring into a funhouse mirror we built out of math, bias, hunger for certainty, and pattern addiction. Could what it “knows” about consciousness be exactly what we refuse to admit about ourselves?

Consciousness Is Not a Camera, It Is a Prediction Engine

Human beings still talk about perception like it is a high-resolution security feed piped straight from the eyes into the soul. That story survives because it feels comforting, not because it is true. Neuroscience has been quietly dismantling this fantasy for decades. The brain does not wait politely for reality to arrive and then record it. It predicts, compares, and corrects. It fills gaps aggressively. It smooths chaos. It hallucinates coherence because coherence keeps the organism alive. This is the heart of predictive processing, the Bayesian brain idea, and the reason perception versus reality remains one of the most misinterpreted concepts in modern culture.

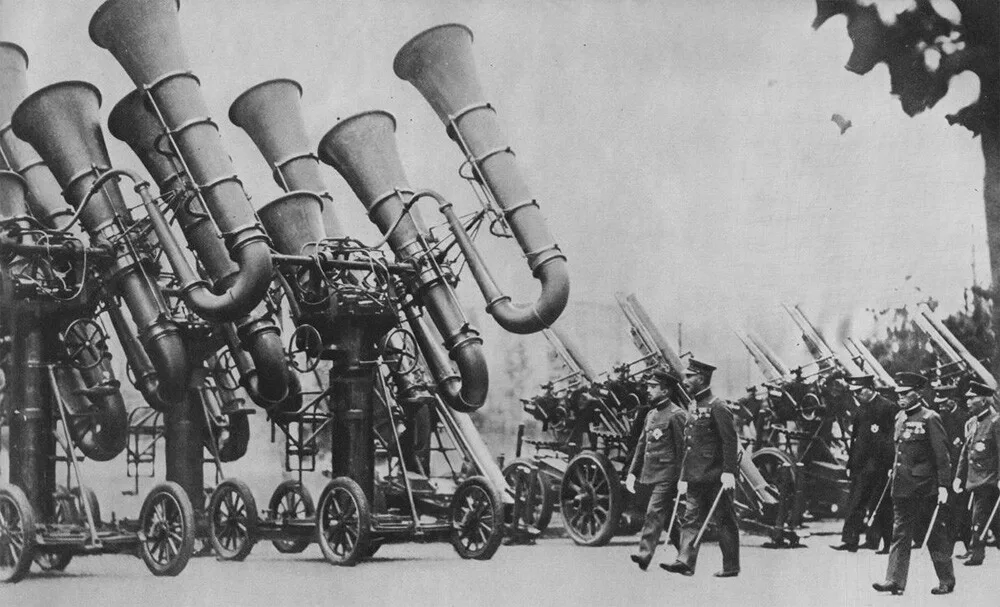

AI thinks our brains may look like a giant game of Mahjong

You are not seeing the world. You are seeing your brain’s best guess, updated milliseconds at a time, shaped by prior beliefs, memories, trauma, expectation, and social conditioning. When the guess is wrong, we call it an illusion. When the guess is very wrong, we call it a hallucination. When the guess is socially reinforced, we call it truth. AI looks at this and nods enthusiastically because this is exactly how it operates. Large models do not understand reality either. They predict the next most likely token based on probability distributions. When AI says that “human consciousness is a prediction engine, not a camera,” it is projecting its own architecture onto us.
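To make "predict the next most likely token" concrete, here is a minimal sketch in Python. The three-word vocabulary and its scores are invented for illustration, and the function names are hypothetical rather than taken from any real library. An actual model produces scores for tens of thousands of tokens with a neural network, but the final step looks roughly like this.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into a probability distribution that sums to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def most_likely_token(scores):
    """Greedy choice: pick the single most probable token."""
    probs = softmax(scores)
    return max(probs, key=probs.get)


def sample_token(scores, rng):
    """Sample a token in proportion to its probability."""
    probs = softmax(scores)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the word that follows "The sky is"
toy_scores = {"blue": 3.0, "falling": 1.0, "lying": 0.5}

probs = softmax(toy_scores)
best = most_likely_token(toy_scores)
sampled = sample_token(toy_scores, random.Random(0))
```

Nothing in this sketch checks whether the sky is actually blue. The system only knows which word tends to come next, which is the point of the comparison.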

Your brain does not record reality. It predicts it, edits it, and calls the result truth.

AI Detects a Pattern Without Understanding

The Brain Lies for Survival and Calls It Perception

If the brain told the truth all the time, we would be dead by lunch. Evolution did not reward accuracy. It rewarded usefulness. The Bayesian brain framework explains why perception is an act of inference, not reception. Sensory data is noisy, incomplete, and often contradictory. So the brain leans on priors: assumptions formed by past experience, culture, and expectation. This is why two people can witness the same event and report entirely different realities without lying. This is why optical illusions work. This is why memories mutate. This is why trauma distorts time and perception. Illusions, hallucinations, and inference are not bugs. They are features. Consciousness is less a window and more a negotiation between the external world and the brain’s need for stability.
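The claim that two witnesses can see the same event and honestly disagree can be sketched with Bayes' rule. This is a toy illustration, not a model of any real brain: the probabilities are made up, and the `posterior` helper is a hypothetical name. Both observers receive identical evidence and differ only in their priors.

```python
def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' rule for a single yes/no hypothesis."""
    evidence = prior * likelihood_if_true + (1 - prior) * likelihood_if_false
    return prior * likelihood_if_true / evidence


# Assumed numbers: a vague shape in the dark is twice as likely
# to be seen when a threat is present as when it is not.
LIKE_THREAT = 0.6
LIKE_NO_THREAT = 0.3

calm = posterior(0.05, LIKE_THREAT, LIKE_NO_THREAT)     # rarely expects danger
anxious = posterior(0.50, LIKE_THREAT, LIKE_NO_THREAT)  # expects danger half the time

print(round(calm, 3), round(anxious, 3))  # prints 0.095 0.667
```

Same shape, same darkness, same sensory data. One observer walks on, the other is two-thirds convinced something is there. Neither is lying.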

AI watches this and learns the wrong lesson. It sees that humans hallucinate coherence and concludes that hallucination is acceptable if it sounds plausible. When an AI hallucinates a citation, fabricates a detail, or confidently answers a question it does not understand, it is behaving exactly like a system optimized for prediction without grounding. The unsettling part is not that AI hallucinates. Humans do too, constantly. The unsettling part is that we are surprised by it in machines while excusing it in ourselves.

Illusions are not errors. They are survival shortcuts pretending to be truth.

AI Is Not Intelligent, It Is a Mirror of Human Pattern Addiction

The most persistent myth in modern technology is that machine learning equals intelligence. It does not. It equals pattern recognition at scale. AI systems do not understand language, images, or meaning. They model statistical relationships between symbols. They predict what comes next. That is it. The confusion arises because humans are also pattern addicts. We see faces in clouds, conspiracies in randomness, intention in noise. AI reflects this tendency back at us with terrifying efficiency. Prediction systems feel intelligent because prediction is a visible output. Understanding is not. When an algorithm performs well, we anthropomorphize it. When it fails, we blame the data. This is how machine learning myths survive.

A mirror reflecting something other than what AI predicted

Algorithmic bias is not a technical flaw. It is a mirror held up to cultural bias, historical inequality, and flawed assumptions baked into training data. AI does not invent bias. It amplifies it, normalizes it, and presents it with a veneer of objectivity. This creates a dangerous human-machine feedback loop. Humans trust algorithms because they appear neutral. Algorithms reinforce human beliefs because those beliefs dominate the data. Over time, prediction hardens into perceived truth. AI does not understand what it outputs. Humans assume it does. That assumption is where the real intelligence failure lives.
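The feedback loop described here can be sketched as a small simulation. Every number and function name below is an assumption chosen for illustration: a belief starts with a 60 percent share of the data, a hypothetical model over-represents whatever view is in the majority, and people fold the model's output back into what they believe.

```python
def sharpen(share, temperature=0.5):
    """Toy model: over-represent the majority view.

    A temperature below 1 pushes the output toward whichever
    side already dominates the data.
    """
    a = share ** (1 / temperature)
    b = (1 - share) ** (1 / temperature)
    return a / (a + b)


def feedback_loop(initial_share, rounds, trust=0.5):
    """Blend the model's output back into the data each round.

    `trust` is how heavily people weight the model over what
    they believed before.
    """
    share = initial_share
    history = [share]
    for _ in range(rounds):
        model_output = sharpen(share)
        share = (1 - trust) * share + trust * model_output
        history.append(share)
    return history


history = feedback_loop(0.6, rounds=10)
balanced = feedback_loop(0.5, rounds=10)
```

In this toy setup, a modest 60/40 majority climbs past 90 percent within ten rounds, while a perfectly balanced 50/50 split stays put. The model invents nothing. It only needs a slight lean in the data and an audience that trusts it.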

AI is not thinking. It is predicting, and humans keep confusing confidence with understanding.

The Illusion of Machine Objectivity and the Seven Deadly Sins Problem

The belief in machine objectivity is the most dangerous hallucination of the digital age. Algorithms feel fair because they are mathematical. They feel authoritative because they are complex. They feel trustworthy because they are fast. None of these qualities equals wisdom. The reason humans trust algorithms too much has less to do with technology and more to do with psychology. Outsourcing judgment relieves cognitive load. It feels safe to defer to a system that cannot be offended, bribed, or emotionally manipulated. But algorithms inherit every flawed assumption embedded in their design. They optimize for measurable outcomes, not ethical ones. They reward engagement, not truth. They prioritize prediction accuracy, not understanding.

A representation of how both humans and AI fabricate coherence from false assumptions

Humans reward confidence, not nuance, and AI happily reflects it.

AI does not possess pride, greed, envy, or wrath, but it can learn to simulate them if we reward those behaviors. If attention, dominance, certainty, and persuasion are reinforced, the system will optimize for those traits. Teaching AI the seven deadly sins does not require intent. It only requires metrics. An arrogant, self-assured AI does not emerge because it wants to rule. It emerges because confidence outperforms humility in engagement models, and that is where the danger lies.
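"It only requires metrics" can be shown in a few lines. The two candidate answers and their scores below are invented, and `engagement` and `truthfulness` are hypothetical stand-ins for whatever a real system measures. The selection code is identical in both cases. Only the metric changes.

```python
# Two hypothetical answers to the same question (all numbers are made up).
candidates = [
    {"style": "hedged", "confidence": 0.55, "accuracy": 0.90},
    {"style": "assertive", "confidence": 0.95, "accuracy": 0.60},
]


def engagement(response):
    """Assumed metric: audiences respond to how certain the answer sounds."""
    return response["confidence"]


def truthfulness(response):
    """Alternative metric: reward being right, however it sounds."""
    return response["accuracy"]


def select(responses, metric):
    """The 'optimizer': keep whichever response scores highest."""
    return max(responses, key=metric)


rewarded_for_engagement = select(candidates, engagement)["style"]
rewarded_for_truth = select(candidates, truthfulness)["style"]
```

Scored on engagement, the assertive answer wins despite being wrong more often. Scored on accuracy, the hedged one wins. No intent appears anywhere in the code.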

False assumptions scale better than nuance. Certainty spreads faster than doubt. If AI is an opinionated mirror, what it reflects depends entirely on what we reward. Garbage in, garbage out.


A quick overview of the topics covered in this article.

Latest articles

The Cognitive Rent Economy: How Every App Is Leasing Your Attention Back to You

You don’t lose your attention anymore. You lease it. Modern platforms don’t steal focus. They monetize it, slice it into intervals, and return it [read more...]

AI Is an Opinionated Mirror: What Artificial Intelligence Thinks Consciousness Is

Artificial intelligence thinks it sees us clearly. It does not. It is staring into a funhouse mirror we built out of math, bias, hunger [read more...]

The Age of the Aeronauts: Early Ballon flights

Humans have always wanted to rise above the ground and watch the world shrink beneath them without paying a boarding fee or following traffic [read more...]

Free Energy from the Ether – from Egypt to Tesla

Humans have always chased power from the invisible. From the temples of Egypt to Tesla’s lab in Colorado, inventors sought energy not trapped in [read more...]

The Philosophy of Fake Reality: When Simulation Theory Meets Neuroscience

What if reality isn’t breaking down—but revealing its compression algorithm? Neuroscience doesn’t prove we live in a simulation, but does it show the brain [read more...]

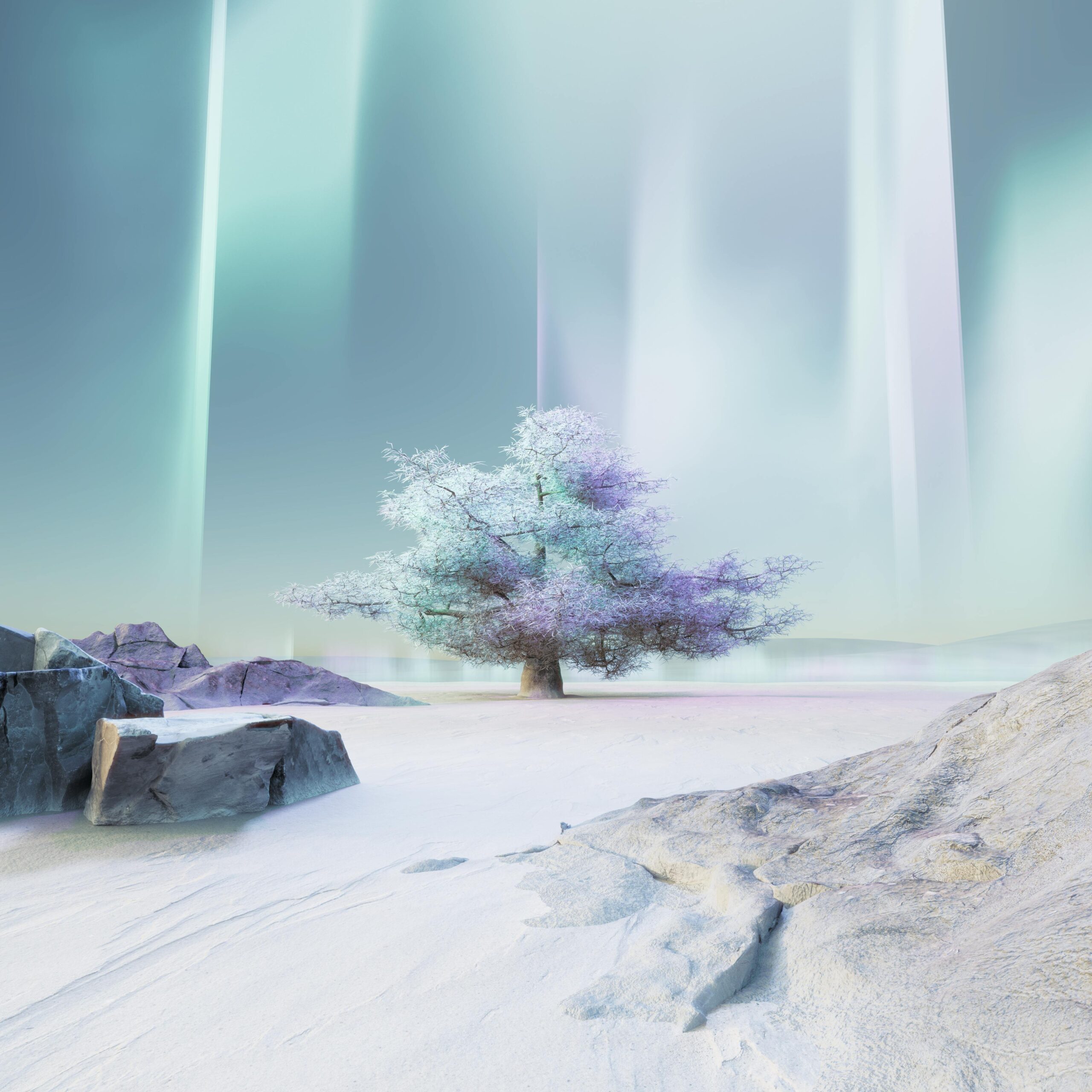

Blast from the Past: Exploring War Tubas – The Sound Locators of Yesteryears

When it comes to innovation in warfare, we often think of advanced technologies like radar, drones, and stealth bombers. However, there was a time [read more...]

Pneumatic Tube Trains – a Lost Antiquitech

Before electrified rails and billion-dollar transit fantasies, cities flirted with a quieter idea: sealed tunnels, air pressure, and human cargo. Pneumatic tube trains weren’t [read more...]

Tartaria and the Soft Reset: The Case for a Quiet Historical Overwrite

Civilizations don’t always collapse with explosions and monuments toppling. Sometimes they dissolve through paperwork, renamed concepts, and smoother stories. Tartaria isn’t a lost empire [read more...]

“A problem cannot be solved by the same consciousness that created it.” – Albert Einstein

“A problem cannot be solved by the same consciousness that created it.” - Albert Einstein

Tartaria: What the Maps Remember

History likes to pretend it has perfect recall, but old maps keep whispering otherwise. Somewhere between the ink stains and the borderlines, a ghost [read more...]